Python AI Language Models

- (Stanford University - Alvin Wei-Cheng Wong)

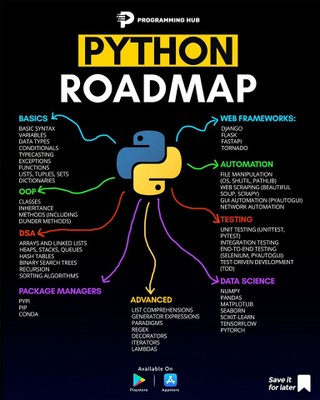

- Overview

Python is a widely used programming language in the field of artificial intelligence (AI), including the development and application of language models.

Its popularity stems from its readability, flexibility, and a vast ecosystem of libraries that support various AI tasks.

Together, these tools make Python the dominant language for researching, developing, and deploying AI systems, and language models in particular.

Key aspects of Python's role in AI language models:

- Deep Learning Frameworks: Python provides access to popular deep learning frameworks like TensorFlow, PyTorch, and Keras, which are essential for building and training large language models (LLMs). These frameworks offer tools for defining neural network architectures, managing data, and optimizing models.

- Natural Language Processing (NLP) Libraries: Libraries such as NLTK and spaCy offer functionalities for common NLP tasks, including tokenization, parsing, and text analysis, which are foundational for working with language data in AI models.

- Transformer Models and Libraries: The Hugging Face Transformers library, a Python library, has become a cornerstone for working with transformer-based models such as BERT, GPT, and T5. It simplifies loading, fine-tuning, and deploying these models.

- Data Manipulation and Analysis: Python's libraries like NumPy and Pandas are crucial for handling and preparing the large datasets required for training language models, enabling efficient data manipulation and analysis.

- Interactive Development: Jupyter Notebooks, a Python-based interactive computing environment, facilitate experimentation and rapid prototyping of language models, allowing researchers and developers to explore data and test model components iteratively.
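As a rough illustration of the tokenization step mentioned above, the following sketch uses only the Python standard library. It is not the NLTK or spaCy API; the function names `tokenize`, `build_vocab`, and `encode`, the `<unk>` placeholder, and the two-sentence corpus are all assumptions made for this example.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word and punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def build_vocab(token_lists, min_count=1):
    """Map every token seen at least min_count times to an integer id.

    Id 0 is reserved for the hypothetical "<unk>" (unknown token) entry.
    """
    counts = Counter(tok for tokens in token_lists for tok in tokens)
    vocab = {"<unk>": 0}
    for tok, n in sorted(counts.items()):
        if n >= min_count:
            vocab[tok] = len(vocab)
    return vocab


def encode(tokens, vocab):
    """Replace each token with its id, falling back to the <unk> id."""
    return [vocab.get(tok, vocab["<unk>"]) for tok in tokens]


# Toy corpus, invented for illustration only.
corpus = ["Python is popular.", "Python is readable!"]
tokenized = [tokenize(sentence) for sentence in corpus]
vocab = build_vocab(tokenized)

# "fast" never appeared in the corpus, so it maps to the <unk> id.
ids = encode(tokenize("Python is fast."), vocab)
print(ids)
```

Production libraries handle many cases this sketch ignores (contractions, Unicode normalization, subword units), which is one reason dedicated NLP libraries are normally preferred.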

Please refer to the following for more information:

- Wikipedia: List of Programming Languages for AI

- Key Aspects and Examples of Python in AI Language Models

Python plays a central role in the development and application of AI language models, particularly Large Language Models (LLMs).

Its extensive ecosystem of libraries and frameworks makes it a preferred choice for building, training, and deploying these models.

1. Libraries and Frameworks:

Python offers a rich collection of specialized libraries that facilitate various stages of language model development:

- Deep Learning Frameworks: TensorFlow and PyTorch are widely used for building and training neural networks, which form the core of modern language models.

- High-level APIs: Keras simplifies the creation of neural networks, providing a user-friendly interface on top of frameworks like TensorFlow.

- Data Manipulation and Analysis: Pandas and NumPy are essential for handling and processing the large datasets required for training language models.

- Natural Language Processing (NLP) Libraries: Libraries like NLTK and spaCy provide tools for text preprocessing, tokenization, and other NLP tasks.

- Model Building and Deployment: Libraries like Hugging Face Transformers offer pre-trained models and tools for fine-tuning and deploying LLMs.

- Agentic Frameworks: LangChain enables the construction of LLM-powered AI agents capable of complex interactions and task execution.

2. Accessibility and Community:

- Python's ease of use and large, active community contribute to its widespread adoption in AI. This fosters a collaborative environment where developers can readily access resources, share knowledge, and leverage existing tools.

3. Integration and Deployment:

- Python's versatility makes it straightforward to integrate language models into a range of applications and platforms, typically by serving the model behind a web service that web or mobile clients call.

4. Examples

- Developing and training LLMs: Researchers and developers use Python deep learning frameworks to build and train LLMs, the class of model that includes GPT, Gemini, Claude, and LLaMA.

- Fine-tuning and customizing models: Python allows for fine-tuning pre-trained models on specific datasets to adapt them to particular tasks or domains.

- Building AI agents: Frameworks like LangChain, which provides a Python library, enable the creation of agents that can interact with users, call external tools, and automate multi-step workflows.

- Data preprocessing and analysis: Python's data science libraries are crucial for preparing and analyzing the vast amounts of text data used in language model training.
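To make the agent idea above more concrete, here is a minimal sketch of a tool-calling loop in plain Python. It does not use LangChain or any real LLM API: `stub_model` is a hard-coded stand-in for a model call, and the `CALL`/`FINAL`/`OBSERVATION` message format, the tool names, and `run_agent` are all hypothetical conventions chosen for this example.

```python
def add_numbers(argument):
    """Hypothetical tool: sum whitespace-separated numbers, e.g. '2 3.5'."""
    return str(sum(float(x) for x in argument.split()))


def word_count(argument):
    """Hypothetical tool: count whitespace-separated words."""
    return str(len(argument.split()))


# Registry of tools the agent is allowed to call.
TOOLS = {"add": add_numbers, "word_count": word_count}


def stub_model(history):
    """Stand-in for an LLM: inspects the last message and picks an action.

    A real agent would send the history to a language model instead.
    """
    last = history[-1]
    if last.startswith("OBSERVATION "):
        # A tool result is available, so report it as the final answer.
        return "FINAL " + last[len("OBSERVATION "):]
    if last.startswith("USER add "):
        return "CALL add " + last[len("USER add "):]
    if last.startswith("USER count "):
        return "CALL word_count " + last[len("USER count "):]
    return "FINAL I cannot help with that."


def run_agent(user_message, model=stub_model, max_steps=3):
    """Alternate between model decisions and tool calls until a final answer."""
    history = ["USER " + user_message]
    for _ in range(max_steps):
        reply = model(history)
        if reply.startswith("FINAL "):
            return reply[len("FINAL "):]
        # Expected shape: "CALL <tool_name> <argument>".
        _, tool_name, argument = reply.split(" ", 2)
        result = TOOLS[tool_name](argument)
        history.append("OBSERVATION " + result)
    return "Stopped: step limit reached."


print(run_agent("add 2 3.5"))
print(run_agent("count the quick brown fox"))
```

The step limit is a design choice worth keeping in any real agent loop, since a model that keeps requesting tools would otherwise never terminate.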

- Python Libraries for Training AI Language Models

Several Python libraries can be used to create and train AI language models and other machine learning models.

Some of the most popular include:

- TensorFlow: TensorFlow is an open-source software library for numerical computation using data flow graphs. It is used for machine learning and deep learning.

- PyTorch: PyTorch is an open-source machine learning framework based on the Torch library. It is used for deep learning research and development.

- Scikit-learn: Scikit-learn is an open-source machine learning library for Python. It includes a wide variety of machine learning algorithms, including classification, regression, clustering, and dimensionality reduction.

- Resources for Learning AI Language Models

- The TensorFlow website: The TensorFlow website provides a number of tutorials and resources for getting started with TensorFlow.

- The PyTorch website: The PyTorch website provides a number of tutorials and resources for getting started with PyTorch.

- The Scikit-learn website: The Scikit-learn website provides a number of tutorials and resources for getting started with Scikit-learn.

- ML Algorithms in Python

- Linear regression: Linear regression is a supervised learning algorithm that is used to predict continuous values. It works by finding a linear relationship between the independent and dependent variables.

- Decision trees: Decision trees are supervised learning algorithms used for classification and regression. They work by recursively splitting the data on feature values into smaller subsets until each subset contains only data points of the same class or a stopping criterion, such as a maximum depth, is reached.

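- Support vector machines (SVMs): SVMs are supervised learning algorithms most commonly used to classify data. For two classes, they work by finding the hyperplane that separates the classes with the largest margin; kernel functions extend the method to data that is not linearly separable.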

- Random forests: Random forests are ensemble learning algorithms that combine the predictions of multiple decision trees, each trained on a random sample of the data, to produce a prediction that is typically more accurate than that of a single tree.

- K-nearest neighbors (KNN): KNN is a supervised learning algorithm used for classification and regression. For classification, it finds the K training points closest to a new data point and predicts the class held by the majority of those neighbors.
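Two of the algorithms in this list are small enough to sketch in plain Python. The code below is an illustrative sketch under simplifying assumptions (a single input feature for the regression, Euclidean distance and majority voting for KNN), not the scikit-learn implementation; the function names `fit_line` and `knn_predict` and the toy data are invented for this example.

```python
import math
from collections import Counter


def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both unnormalized).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def knn_predict(train_points, train_labels, query, k=3):
    """Classify query by majority vote among its k nearest training points."""
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Linear regression on toy data generated from y = 2x + 1.
slope, intercept = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
print(slope, intercept)

# KNN on two well-separated toy clusters labeled "a" and "b".
points = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
labels = ["a", "a", "a", "b", "b", "b"]
print(knn_predict(points, labels, (0.5, 0.5)))
print(knn_predict(points, labels, (5.5, 5.5)))
```

In practice, a library such as Scikit-learn would normally be used instead, since it covers multiple features, input validation, and efficient neighbor search.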